About the Database

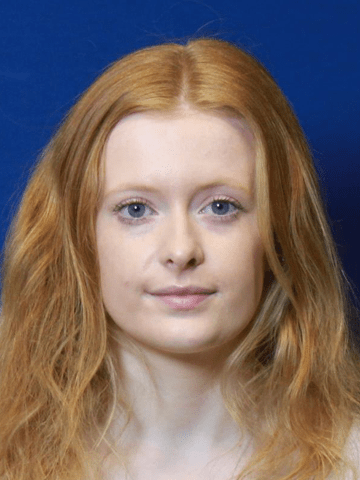

The UEA 3D Faces Database was created by the Person and Space Perception lab at the Department of Psychology, University of East Anglia (UEA), with support from a Royal Society research grant. The database includes a high-resolution 3D Face Models dataset, a Facial Images dataset and a Dynamic Facial Expressions dataset of about 100 people (aged between 18 and 55 years; 25 male, 70 female and 4 nonbinary; 75 White and 25 of Asian, Black, mixed or other ethnic background).

The 3D Face Models dataset contains about 300 3D face models (three per person); the Facial Images dataset contains about 1,000 photographs showing faces in five facing directions (ten per person); and the Dynamic Facial Expressions dataset contains about 2,300 short videos of emotional facial expressions (23 per person).
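The per-person counts multiply out to the dataset totals. The short Python sketch below makes that arithmetic explicit, using the breakdowns given in the dataset sections of this page (two photograph sets of five views each; 8 basic plus 15 elicited expression videos). The dictionary keys and the fixed count of exactly 100 people are illustrative assumptions, not a file layout used by the database.

```python
# Per-person counts as described on this page; the key names are illustrative only.
N_PEOPLE = 100  # the text says "about 100"; 100 is used here for the arithmetic

# Five facing directions photographed in each image set
VIEWS = ["frontal", "left profile", "right profile",
         "left three-quarter", "right three-quarter"]

PER_PERSON = {
    "3d_models": 3,                 # Scanning, FaceGen and 3DDFA sets
    "photographs": 2 * len(VIEWS),  # Control and Natural image sets
    "videos": 8 + 15,               # basic emotions + elicited scenarios
}


def dataset_totals(n_people: int) -> dict:
    """Scale each per-person count to the whole database."""
    return {kind: count * n_people for kind, count in PER_PERSON.items()}


if __name__ == "__main__":
    print(dataset_totals(N_PEOPLE))
    # {'3d_models': 300, 'photographs': 1000, 'videos': 2300}
```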

The database is free to use for non-commercial academic research by researchers working at an officially accredited university or research institution.

To access and use the database, please download the license agreement, sign it and return it to us at thepsplab@gmail.com. Alternatively, you may sign the license agreement online.

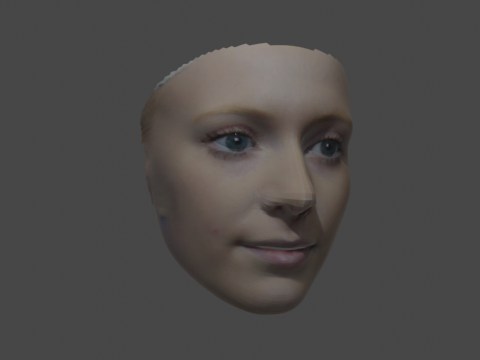

3D Face Models

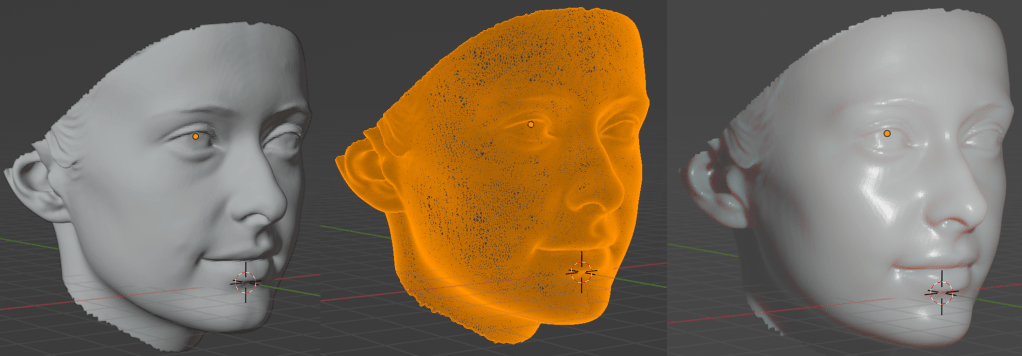

Each face has three 3D face models, created using 3D face scanning, the FaceGen Modeller software package and the deep learning algorithm 3DDFA, respectively.

Photographs

Each face is shown in two sets of photographs, taken at different camera distances, in five facing directions (frontal, left and right profile, and left and right three-quarter views).

Dynamic Facial Expressions

Each person has 23 videos showing both basic facial expressions (e.g., happy or sad) and scenario-induced facial emotions (e.g., winning first prize in a big competition).

3D Face Models Dataset

For each of the 100 people, we created three sets of 3D face models using three different methods: the Scanning Set was created by scanning the faces with a high-resolution 3D scanner, the FaceGen Set was created with the FaceGen Modeller software package, and the Machine Learning Set (the 3DDFA Set described below) was generated with 3D face reconstruction tools based on deep learning (e.g., 3DDFA).

The Scanning Set was created by scanning participants' faces with an Artec Eva 3D scanner (Artec 3D, Luxembourg). The scans were processed in Artec Studio v16, and the final 3D faces are stored in Wavefront format (.obj). The Scanning Set provides the “ground truth” of the 3D structure and shape of the 100 faces in our database.
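Because the Scanning Set is distributed as Wavefront .obj files, a face model's geometry can be inspected with very little code. The following is a minimal pure-Python sketch that assumes only the standard `v x y z` vertex records of the .obj format; it ignores faces, normals and texture coordinates, and the function names are hypothetical rather than part of any tool shipped with the database.

```python
from typing import Iterable, List, Tuple

Vertex = Tuple[float, float, float]


def read_obj_vertices(lines: Iterable[str]) -> List[Vertex]:
    """Collect geometric vertices ("v x y z") from Wavefront .obj text.

    Comments, faces ("f"), normals ("vn") and texture coordinates ("vt")
    are skipped; any extra values after x y z (e.g. w or colour) are ignored.
    """
    vertices: List[Vertex] = []
    for line in lines:
        parts = line.split()
        if parts and parts[0] == "v":
            x, y, z = (float(p) for p in parts[1:4])
            vertices.append((x, y, z))
    return vertices


def bounding_box(vertices: List[Vertex]) -> Tuple[Vertex, Vertex]:
    """Return the (min corner, max corner) of the axis-aligned bounding box."""
    xs, ys, zs = zip(*vertices)
    return (min(xs), min(ys), min(zs)), (max(xs), max(ys), max(zs))
```

For example, `with open(path) as f: verts = read_obj_vertices(f)` reads one model, where `path` stands for whichever downloaded .obj file you want to inspect. For full mesh handling (faces, textures), a dedicated mesh library or Blender's own importer is the better choice.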

The FaceGen Set was created with the FaceGen Modeller software package (Singular Inversions, Canada) from three face images showing the frontal, left profile and right profile views. Both Wavefront (.obj) and FaceGen (.fg) files are available, allowing further manipulation along various demographic and emotional dimensions.

The 3DDFA Set was generated with a PyTorch implementation of 3DDFA-V2 (Guo et al., 2020) from a single frontal-view face image. We are working on generating more sets of 3D faces with other state-of-the-art machine learning implementations for 3D face reconstruction (such as DECA and Deep3DFace).

3D Face Models Dataset in Blender

In addition to the Wavefront format (i.e., .obj files), we created a Blender file for each 3D face set, with pre-imported face models that are roughly aligned with each other in size and facing orientation. These face models can therefore be manipulated, animated and rendered flexibly in Blender, the free and open-source 3D creation suite (Blender Foundation, the Netherlands).

Each of the following Blender files contains all 100 individual face models of one set, so the files can be used with scripts to speed up and automate the processing and manipulation of multiple face models in a batch.

Scanning Set pre-imported in Blender

FaceGen Set pre-imported in Blender

3DDFA Set pre-imported in Blender
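Inside Blender, batch scripts for these files would normally use Blender's bundled Python API (`bpy`), which is not shown here. As a tool-agnostic sketch of one step such a script might automate, the code below centers each model on its bounding-box center and scales its largest extent to 1, so that models from a set become comparable in size. This normalization is an illustrative assumption, not necessarily how the pre-imported models were aligned; the vertex lists could come from any .obj reader, and the model names in a call are hypothetical.

```python
from typing import Dict, List, Tuple

Vertex = Tuple[float, float, float]


def normalize(vertices: List[Vertex]) -> List[Vertex]:
    """Center a mesh on its bounding-box center and scale its largest extent to 1.

    Illustrative normalization only; not necessarily the alignment used
    for the pre-imported Blender files.
    """
    xs, ys, zs = zip(*vertices)
    mins = (min(xs), min(ys), min(zs))
    maxs = (max(xs), max(ys), max(zs))
    center = tuple((lo + hi) / 2 for lo, hi in zip(mins, maxs))
    # Guard against a degenerate mesh whose vertices all coincide
    extent = max(hi - lo for lo, hi in zip(mins, maxs)) or 1.0
    return [tuple((c - m) / extent for c, m in zip(v, center)) for v in vertices]


def normalize_all(models: Dict[str, List[Vertex]]) -> Dict[str, List[Vertex]]:
    """Apply the same normalization to every model in a set, keyed by model name."""
    return {name: normalize(verts) for name, verts in models.items()}
```

Applying one rule to every model is the point of batch scripting here: all 100 faces in a set are treated identically, which is hard to guarantee when adjusting models by hand.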

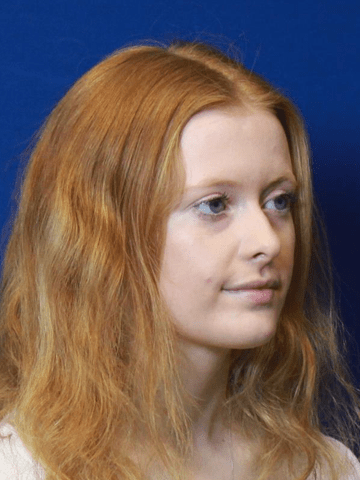

Facial Images Dataset

We have two sets of facial images in the database.

The Control Image Set was taken with participants wearing a hairnet at a camera distance of 2 meters, whereas the Natural Image Set was taken without a hairnet at a distance of 3.2 meters. Each set contains facial images taken from five different orientations (frontal view, left and right profile views, and left and right three-quarter views), all with a neutral facial expression. Face images from the Control Image Set were used to create the 3D face models for the FaceGen Set and the 3DDFA Set.

Dynamic Facial Expressions Dataset

We created two sets of dynamic facial expressions of emotion for each of the 100 people in our 3D faces database. The Basic Facial Emotion Set contains recordings of eight basic facial emotions (neutral, happiness, sadness, anger, fear, surprise, disgust and pain). These videos were taken with the same camera settings as the Control Image Set of facial images.

The Elicited Facial Emotion Set contains recorded facial expressions induced by 15 social and emotional scenarios (designed to induce the eight facial emotions above). These 15 scenarios can be grouped into social contexts (e.g., winning first prize in a big competition) and physical/physiological contexts (e.g., seeing a snake slither into a sleeping bag).

Facial landmarks could be automatically detected using existing face analysis tools.
